Inyoung Cheong in the WSJ

Security Lab member and UW Law PhD student Inyoung Cheong was quoted in a Wall Street Journal article on AI and intellectual property: “AI Tech Enables Industrial-Scale Intellectual-Property Theft, Say Critics”.

Security and Privacy Research Lab

Paul G. Allen School of Computer Science & Engineering, University of Washington


We are so excited about presentations at USENIX Enigma 2023 from two UW Security Lab alums: Lucy Simko (“How Geopolitical Change Causes People to Become (More) Vulnerable”) and Eric Zeng (“Characterizing and Measuring Misleading and Harmful Online Ad Content at Scale”)! Check out their talk videos below:

Congratulations to Alaa Daffalla (Cornell), Lucy Simko (UW Security Lab alumna), Tadayoshi Kohno (UW Security Lab faculty member), and Alexandru Bardas (Kansas) for being recognized with the Honorable Mention in the NSA’s 10th Annual Best Scientific Cybersecurity Paper Competition!! This is a huge honor and important work. You can read the paper, which was published at the IEEE Symposium on Security & Privacy in 2021, here: “Defensive Technology Use by Political Activists During the Sudanese Revolution”.

(Cross-posted from Allen School News, by Kristin Osborne)

IEEE honored Kohno for “contributions to cybersecurity” — an apt reference to Kohno’s broad influence across a variety of domains. As co-director of the Allen School’s Security and Privacy Research Lab and the UW Tech Policy Lab, Kohno has explored the technical vulnerabilities and societal implications of technologies ranging from do-it-yourself genealogy research, to online advertising, to mixed reality.

His first foray into high-profile security research, as a Ph.D. student at the University of California San Diego, struck at the heart of democracy: security and privacy flaws in the software that powered electronic voting machines. What Kohno and his colleagues discovered shocked vendors, elections officials, and other cybersecurity experts.

“Not only could votes and voters’ privacy be compromised by insiders with direct access to the machines, but such systems were also vulnerable to exploitation by outside attackers as well,” said Kohno. “For instance, we demonstrated that a voter could cast unlimited votes undetected, and they wouldn’t require privileged access to do it.”

After he joined the University of Washington faculty, Kohno turned his attention from safeguarding the heart of democracy to an actual heart when he teamed up with other security researchers and physicians to study the security and privacy weaknesses of implantable medical devices. They found that devices such as pacemakers and cardiac defibrillators that rely on embedded computers and wireless technology to enable physicians to non-invasively monitor a patient’s condition were vulnerable to unauthorized remote interactions that could reveal sensitive health information — or even reprogram the device itself. This groundbreaking work earned Kohno and his colleagues a Test of Time Award from the IEEE Computer Society Technical Committee on Security and Privacy in 2019.

“To my knowledge, it was the first work to experimentally analyze the computer security properties of a real wireless implantable medical device,” Kohno recalled at the time, “and it served as a foundation for the entire medical device security field.”

Kohno and a group of his students subsequently embarked on a project with researchers at his alma mater that revealed the security and privacy risks of increasingly computer-dependent automobiles, and in dramatic fashion: by hacking into a car’s systems and demonstrating how it was possible to take control of its various functions.

“It took the industry by complete surprise,” Kohno said in an article published in 2020. “It was clear to us that these vulnerabilities stemmed primarily from the architecture of the modern automobile, not from design decisions made by any single manufacturer … Like so much that we encounter in the security field, this was an industry-wide issue that would require industry-wide solutions.”

Those industry-wide solutions included manufacturers dedicating new staff and resources to the cybersecurity of their vehicles, the development of new national automotive cybersecurity standards, and creation of a new cybersecurity testing laboratory at the National Highway Traffic Safety Administration. Kohno and his colleagues have been recognized multiple times and in multiple venues for their role in these developments, including a Test of Time Award in 2020 from the IEEE Computer Society Technical Committee on Security and Privacy and a Golden Goose Award in 2021 from the American Association for the Advancement of Science. And most importantly, millions of cars — and their occupants — are safer as a result.

Kohno has journeyed into other uncharted territory by exploring how to mitigate privacy and security concerns associated with nascent technologies, from mixed reality to genetic genealogy services. For example, he and Security and Privacy Research Lab co-director Franziska Roesner have collaborated on an extensive line of research focused on safeguarding users’ security and privacy in augmented-reality environments. The results include ShareAR, a suite of developer tools for safeguarding users’ privacy while enabling interactive features in augmented-reality environments. They also worked with partners in the UW Reality Lab to organize a summit for members of academia and industry and issue a report exploring design and regulatory considerations for ensuring the security, privacy and safety of mixed reality technologies. Separately, Kohno teamed up with colleagues in the Molecular Information Systems Lab to uncover how vulnerabilities in popular third-party genetic genealogy websites put users’ sensitive personal genetic information at risk. Members of the same team also demonstrated that DNA sequencing software could be vulnerable to malware encoded into strands of synthetic DNA, an example of the burgeoning field of cyber-biosecurity.

Kohno’s contributions to understanding and mitigating emerging cybersecurity threats extend to autonomous vehicle algorithms, mobile devices, and the Internet of Things. Although projects exposing the hackability of cars and voting machines may capture headlines, Kohno himself is most captivated by the human element of security and privacy research — particularly as it relates to vulnerable populations. For example, he and his labmates recently analyzed the impact of electronic monitoring apps on people subjected to community supervision, also known as “e-carceration.” Their analysis focused on not only the technical concerns but also the experiences of people compelled to use the apps, from privacy concerns to false reports and other malfunctions. Other examples include projects exploring security and privacy concerns of recently arrived refugees in the United States, with a view to understanding how language barriers and cultural differences can impede the use of security best practices and make them more vulnerable to scams, and technology security practices employed by political activists in Sudan in the face of potential government censorship, surveillance, and seizure.

“I chose to specialize in computer security and privacy because I care about people. I wanted to safeguard people against the harms that can result when computer systems are compromised,” Kohno said. “To mitigate these harms, my research agenda spans from the technical — that is, understanding the technical possibilities of adversaries as well as advancing technical approaches to defending systems — to the human, so that we also understand people’s values and needs and how they prefer to use, or not use, computing systems.”

In addition to keeping up with technical advancements that could impact privacy and security, Kohno is also keen to push the societal implications of new technologies to the forefront. To that end, he and colleagues have investigated a range of platforms and practices, from the development of design principles that would safeguard vulnerable and marginalized populations to understanding how online political advertising contributes to the spread of misinformation, to educate and support researchers and developers of these technologies. He has also attempted to highlight the ethical issues surrounding new technologies through a recent foray into speculative and science fiction writing. For example, his self-published novella “Our Reality” explores how mixed reality technologies designed with a default user in mind can have real-world consequences for people’s education, employment, access to services, and even personal safety.

“It’s important as researchers and practitioners to consider the needs and concerns of people with different experiences than our own,” Kohno said. “I took up fiction writing for the joy of it, but also because I wanted to enable educators and students to explore some of the issues raised by our research in a more accessible way. Instead of identifying how a technology might have gone wrong, I want to help people focus from the start on answering the question, ‘how do we get this right?’”

Congratulations to Security Lab PhD student Michael Flanders and faculty member David Kohlbrenner and their co-authors for winning 2nd place in the CSAW 2022 Applied Research Competition!! Their paper “Augury: Using Data Memory-Dependent Prefetchers to Leak Data at Rest” appeared at the IEEE Symposium on Security & Privacy 2022.

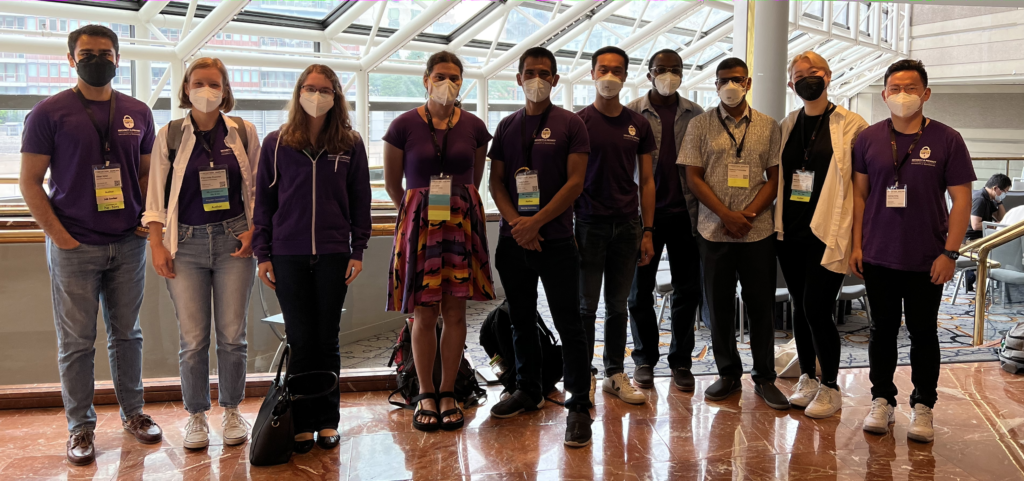

Many members of the UW Security and Privacy Research Lab were thrilled last week to finally re-join our broader research community in person in Boston, at SOUPS and USENIX Security 2022. It was fantastic to see some of our alumni, talk in person with current and future collaborators, meet new members of the community, catch up with old and new friends, and more!

Our members presented a great set of talks across both conferences:

(Cross-posted from Allen School News, by Kristin Osborne)

For people around the world, technology eases the friction of everyday life: bills paid with a few clicks online, plans made and sometimes broken with the tap of a few keys, professional and social relationships initiated and sustained from anywhere at the touch of a button. But not everyone experiences technology in a positive way, because technology — including built-in safeguards for protecting privacy and security — isn’t designed with everyone in mind. In some cases, the technology community’s tendency to develop for a “default persona” can lead to harm. This is especially true for people who, whether due to age, ability, identity, socioeconomic status, power dynamics or some combination thereof, are vulnerable to exploitation and/or marginalized in society.

Researchers in the Allen School’s Security & Privacy Research Lab have partnered with colleagues at the University of Florida and Indiana University to provide a framework for moving technology design beyond the default when it comes to user security and privacy. With a $7.5 million grant from the National Science Foundation through its Secure and Trustworthy Cyberspace (SaTC) Frontiers program, the team will blend computing and the social sciences to develop a holistic and equitable approach to technology design that addresses the unique needs of users who are underserved by current security and privacy practices.

“Technology is an essential tool, sometimes even a lifeline, for individuals and communities. But too often the needs of marginalized and vulnerable people are excluded from conversations around how to design technology for safety and security,” said Allen School professor and co-principal investigator Franziska Roesner. “Our goal is to fundamentally change how our field approaches this question to center the voices of marginalized and vulnerable people, and the unique security and privacy threats that they face, and to make this the norm in future technology design.”

To this end, Roesner and her collaborators — including Allen School colleague and co-PI Tadayoshi Kohno — will develop new security and privacy design principles that focus on mitigating harm while enhancing the benefits of technology for marginalized and vulnerable populations. These populations are particularly susceptible to threats to their privacy, security and even physical safety through their use of technology: children and teenagers, LGBTQ+ people, gig and persona workers, people with sensory impairments, people who are incarcerated or under community supervision, and people with low socioeconomic status. The team will tackle the problem using a three-prong approach, starting with an evaluation of how these users have been underserved by security and privacy solutions in the past. They will then examine how these users interact with technology, identifying both threats and benefits. Finally, the researchers will synthesize what they learned to systematize design principles that can be applied to the development of emerging technologies, such as mixed reality and smart city technologies, to ensure they meet the privacy and security needs of such users.

The researchers have no intention of imposing solutions on marginalized and vulnerable communities; a core tenet of their proposal is direct consultation and collaboration with affected people throughout the duration of the project. They will accomplish this through both quantitative and qualitative research that directly engages communities in identifying their unique challenges and needs and evaluating proposed solutions. The team will apply these insights as it explores how to leverage or even reimagine technologies to address those challenges and needs while adhering to overarching security and privacy goals around the protection of people, systems, and data.

The team’s approach is geared to ensuring that the outcomes are relevant as well as grounded in rigorous scientific theory. It’s a methodology that Roesner, Kohno, and their colleagues hope will become ingrained in the privacy and security community’s approach to new technologies — but they anticipate the impact will extend far beyond their field.

“In addition to what this will mean in terms of a more inclusive approach to designing for security and privacy, one of the aspects that I’m particularly excited about is the potential to build a community of researchers and practitioners who will ensure that the needs of marginalized and vulnerable users will be met over the long term,” said Kohno. “Our work will not only inform technology design, but also education and government policy. The impact will be felt not only in the research and development community but also society at large.”

Kohno and Roesner are joined in this work by PI Kevin Butler and co-PIs Eakta Jain and Patrick Traynor at the University of Florida, co-PIs Kurt Hugenberg and Apu Kapadia at Indiana University, and Elissa Redmiles, CEO & Principal Researcher at Human Computing Associates. The team’s proposal, “Securing the Future of Computing for Marginalized and Vulnerable Populations,” is one of three projects selected by NSF in its latest round of SaTC Frontiers awards worth a combined $24.5 million. The other projects focus on securing the open-source software supply chain and extending the “trusted execution environment” principle to secure computation in the cloud.

Read the NSF announcement here and the University of Florida announcement here.

The UW Security and Privacy Research Lab is incredibly excited to congratulate our many graduates this year! It has been a tough couple of years for everyone, and our BS, MS, PhD, and Postdoc graduates have nevertheless conducted incredible research and contributed to a great lab community. We will miss you all, and we can’t wait to see where your careers take you!

This year’s graduates include:

Congratulations, everyone!!! We are so proud of you!

Security lab faculty member David Kohlbrenner and collaborators announced the Hertzbleed Attack today. The team found a way to mount remote timing attacks on constant-time cryptographic code running on modern x86 processors (see Twitter thread). From the website: “Hertzbleed is a new family of side-channel attacks: frequency side channels. In the worst case, these attacks can allow an attacker to extract cryptographic keys from remote servers that were previously believed to be secure.” The Hertzbleed paper will appear in the 31st USENIX Security Symposium. Congratulations to the team!

(Cross-posted from UW News, by Sarah McQuate and Rebecca Gourley)

UW researchers found that political ads during the 2020 election season used multiple concerning tactics, including posing as a poll to collect people’s personal information or having headlines that might affect web surfers’ views of candidates. Credit: University of Washington

Online advertisements are frequently splashed across news websites. Clicking on these banners or links provides the news site with revenue. But these ads also often use manipulative techniques, researchers say.

University of Washington researchers were curious about what types of political ads people saw during the 2020 presidential election. The team looked at more than 1 million ads from almost 750 news sites between September 2020 and January 2021. Of those ads, almost 56,000 had political content.

Political ads used multiple tactics that concerned the researchers, including posing as a poll to collect people’s personal information or having headlines that might affect web surfers’ views of candidates.

The researchers presented these findings Nov. 3 at the ACM Internet Measurement Conference 2021.

“The election is a time when people are getting a lot of information, and our hope is that they are processing it to make informed decisions toward the democratic process. These ads make up part of the information ecosystem that is reaching people, so problematic ads could be especially dangerous during the election season,” said senior author Franziska Roesner, UW associate professor in the Paul G. Allen School of Computer Science & Engineering.

The team wondered if or how ads would take advantage of the political climate to prey on people’s emotions and get people to click.

“We were well positioned to study this phenomenon because of our previous research on misleading information and manipulative techniques in online ads,” said Tadayoshi Kohno, UW professor in the Allen School. “Six weeks leading up to the election, we said, ‘There are going to be interesting ads, and we have the infrastructure to capture them. Let’s go get them. This is a unique and historic opportunity.’”

The researchers created a list of news websites that spanned the political spectrum and then used a web crawler to visit each site every day. The crawler scrolled through the sites and took screenshots of each ad before clicking on the ad to collect the URL and the content of the landing page.
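The crawl steps described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the researchers' actual tooling: the `Browser` interface, the `AdRecord` fields, and the in-memory `FakeBrowser` are all hypothetical names introduced here so the example runs without a real browser. A real crawler would put a browser-automation library behind the same interface and run the loop once per day.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Protocol, Tuple


@dataclass
class AdRecord:
    """One captured ad (hypothetical record layout)."""
    site: str
    screenshot: bytes
    landing_url: str
    landing_content: str


class Browser(Protocol):
    """Hypothetical browser-automation interface assumed by this sketch."""
    def open(self, url: str) -> None: ...
    def scroll_to_bottom(self) -> None: ...
    def find_ads(self) -> List[str]: ...
    def screenshot(self, ad_id: str) -> bytes: ...
    def click(self, ad_id: str) -> Tuple[str, str]: ...


def crawl_site(browser: Browser, site: str) -> List[AdRecord]:
    """Visit one site, scroll it, then screenshot and click each ad."""
    browser.open(site)
    browser.scroll_to_bottom()  # scroll so ads further down the page load
    records = []
    for ad_id in browser.find_ads():
        shot = browser.screenshot(ad_id)  # screenshot first, then click
        landing_url, landing_content = browser.click(ad_id)
        records.append(AdRecord(site, shot, landing_url, landing_content))
    return records


def crawl_once(browser: Browser, sites: Iterable[str]) -> List[AdRecord]:
    """One pass over the site list; a scheduler would call this daily."""
    records: List[AdRecord] = []
    for site in sites:
        records.extend(crawl_site(browser, site))
    return records


class FakeBrowser:
    """In-memory stand-in so the sketch runs without a real browser."""

    def __init__(self, pages: Dict[str, Dict[str, Tuple[str, str]]]):
        self.pages = pages  # site -> {ad_id: (landing_url, landing_content)}
        self.current = ""
        self.log: List[Tuple[str, str]] = []

    def open(self, url: str) -> None:
        self.current = url
        self.log.append(("open", url))

    def scroll_to_bottom(self) -> None:
        self.log.append(("scroll", self.current))

    def find_ads(self) -> List[str]:
        return list(self.pages[self.current])

    def screenshot(self, ad_id: str) -> bytes:
        self.log.append(("screenshot", ad_id))
        return f"png:{ad_id}".encode()

    def click(self, ad_id: str) -> Tuple[str, str]:
        self.log.append(("click", ad_id))
        return self.pages[self.current][ad_id]
```

For example, `crawl_once(FakeBrowser(pages), pages)` with a one-site, one-ad `pages` dictionary returns a single `AdRecord` whose `landing_url` is the URL stored for that ad.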

The team wanted to make sure to get a broad range of ads, because someone based at the UW might see a different set of ads than someone in a different location.

“We know that political ads are targeted by location. For example, ads for Washington candidates will only be featured to viewers browsing from the state of Washington. Or maybe a presidential campaign will have more ads featured in a swing state,” said lead author Eric Zeng, UW doctoral student in the Allen School.

“We set up our crawlers to crawl from different locations in the U.S. Because we didn’t actually have computers set up across the country, we used a virtual private network to make it look like our crawlers were loading the sites from those locations.”

The researchers initially set up the crawlers to search news sites as if they were based in Miami, Seattle, Salt Lake City and Raleigh, North Carolina. After the election, the team also wanted to capture any ads related to the Georgia special election and the Arizona recount, so two crawlers started searching as if they were based in Atlanta and Phoenix.

The team continued crawling sites throughout January 2021 to capture any ads related to the Capitol insurrection.

Some political ads posed as a poll to collect people’s personal information. Credit: University of Washington

The researchers used natural language processing to classify ads as political or non-political. Then the team went through the political ads manually to further categorize them, such as by party affiliation, who paid for the ad or what types of tactics the ad used.
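The two-stage process above, an automated political/non-political split followed by manual categorization, can be illustrated with a deliberately simple stand-in. The keyword matcher below is not the natural language processing model the researchers used. It is a toy substitute, and the term list and function names are assumptions made up for this sketch. It only shows how an automated first pass can narrow a large set of ads down to the ones that need human review.

```python
import re
from typing import Iterable, List, Tuple

# Hypothetical keyword list, for illustration only.
POLITICAL_TERMS = frozenset({
    "election", "vote", "ballot", "poll", "senate",
    "president", "campaign", "candidate",
})


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def is_political(ad_text: str, terms: frozenset = POLITICAL_TERMS) -> bool:
    """Toy rule: an ad is political if any token is in the term list."""
    return any(token in terms for token in tokenize(ad_text))


def split_for_review(ads: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Return (political, non_political); the first list goes to manual coding."""
    political: List[str] = []
    non_political: List[str] = []
    for ad in ads:
        (political if is_political(ad) else non_political).append(ad)
    return political, non_political
```

Exact-token matching like this misses variants such as "presidential" and cannot read text embedded in images, which is one reason a real study would rely on a trained classifier and human review rather than a fixed word list.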

“We saw these fake poll ads that were harvesting personal information, like email addresses, and trying to prey on people who wanted to be politically involved. These ads would then use that information to send spam, malware or just general email newsletters,” said co-author Miranda Wei, UW doctoral student in the Allen School. “There were so many fake buttons in these ads, asking people to accept or decline, or vote yes or no. These things are clearly intended to lead you to give up your personal data.”

Ads that appeared to be polls were more likely to be used by conservative-leaning groups, such as conservative news outlets and nonprofit political organizations. These ads were also more likely to be featured on conservative-leaning websites.

The most popular type of political ad was click-bait news articles that often mentioned top politicians in sensationalist headlines, but the articles themselves contained little substantial information. The team observed more than 29,000 of these ads, and the crawlers often encountered the same ad multiple times. Similar to the fake poll ads, these were also more likely to appear on right-leaning sites.

“One example was a headline that said, ‘There’s something fishy in Biden’s speeches,’” said Roesner, who is also the co-director of the UW Security and Privacy Research Lab. “I worry that these articles are contributing to a set of evidence that people have amassed in their minds. People probably won’t remember later where they saw this information. They probably didn’t even click on it, but it’s still shaping their view of a candidate.”

Click-bait news articles often mentioned top politicians in sensationalist headlines, but the articles themselves contained little substantial information. Credit: University of Washington

The researchers were surprised and relieved, however, to find a lack of ads containing explicit misinformation about how and where to vote, or who won the election.

“To their credit, I think the ad platforms are catching some misinformation,” Zeng said. “What’s getting through are ads that are exploiting the gray areas in content and moderation policies, things that seem deceptive but play to the letter of the law.”

The world of online ads is so complicated, the researchers said, that it’s hard to pinpoint exactly why or how certain ads appear on specific sites or are viewed by specific viewers.

“Certain ads get shown in certain places because the system decided that those would be the most lucrative ads in those spots,” Roesner said. “It’s not necessarily that someone is sitting there doing this on purpose, but the impact is still the same — people who are the most vulnerable to certain techniques and certain content are the ones who will see it more.”

To protect computer users from problematic ads, the researchers suggest web surfers should be careful about taking content at face value, especially if it seems sensational. People can also limit how many ads they see by getting an ad blocker.

Theo Gregersen, a UW undergraduate student studying computer science, is also a co-author on this paper. This research was funded by the National Science Foundation, the UW Center for an Informed Public, and the John S. and James L. Knight Foundation.

For more information, contact badads@cs.washington.edu.