(Reposted from UW News.)

In a survey of 315 people, respondents largely found creating and sharing sexually explicit “deepfakes” unacceptable. But far fewer respondents strongly opposed seeking out these media. (Photo: John M. Smit/Unsplash)

Content warning: This post contains details of sharing intimate imagery without consent that may be disturbing to some readers.

While much attention on sexually explicit “deepfakes” has focused on celebrities, these non-consensual sexual images and videos generated with artificial intelligence harm people both in and out of the limelight. As text-to-image AI models grow more sophisticated and easier to use, the volume of such content is only increasing. The escalating problem led Google to announce last week that it will work to filter out these deepfakes in search results, and the Senate recently passed a bill allowing victims to seek legal damages from deepfake creators.

Given this rising attention, researchers at the University of Washington and Georgetown University wanted to better understand public opinions about the creation and dissemination of what they call “synthetic media.” In a survey, 315 people largely found creating and sharing synthetic media unacceptable. But far fewer respondents strongly opposed seeking out these media — even when they portrayed sexual acts.

Yet previous research has shown that when other people view image-based abuse, such as nudes shared without consent, the victims are significantly harmed. And in nearly all states, including Washington, creating and sharing such non-consensual content is a crime.

“Centering consent in conversations about synthetic media, particularly intimate imagery, is key as we look for ways to reduce its harms — whether that’s through technology, public messaging or policy,” said lead author Natalie Grace Brigham, who was a UW master’s student in the Paul G. Allen School of Computer Science & Engineering while completing this research. “In a synthetic nude, it’s not the subject’s body — as we’ve typically considered it — that’s being shared. So we need to expand our norms and ideas about consent and privacy to account for this new technology.”

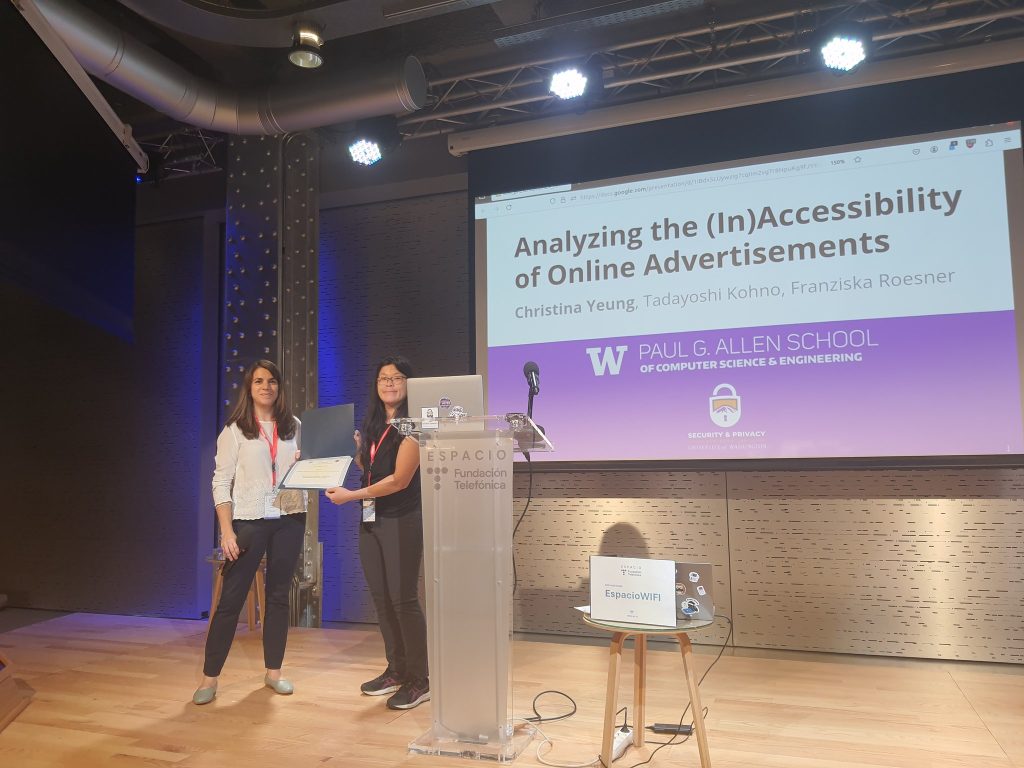

The researchers will present their findings Aug. 13 at the 20th Symposium on Usable Privacy and Security in Philadelphia.

“In some sense, we’re at a new frontier in how people’s rights to privacy are being violated,” said co-senior author Tadayoshi Kohno, a UW professor in the Allen School. “These images are synthetic, but they still are of the likeness of real people, so seeking them out and viewing them is harmful for those people.”

The survey, which the researchers conducted online through Prolific, a site that pays people to respond on a variety of topics, asked U.S. respondents to read vignettes about synthetic media. The team altered variables in these scenarios like who created the synthetic media (an intimate partner, a stranger), why they created it (for harm, entertainment or sexual pleasure), and what action was shown (the subject performing a sexual act, playing a sport or speaking).
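To make the vignette design concrete, here is a minimal sketch of how scenarios could be assembled from the variables named above. It assumes every combination of creator, intent and action is used, which the study may not have done, and it lists only the levels mentioned in this article. The names `CREATORS`, `INTENTS`, `ACTIONS` and `build_vignettes` are hypothetical and chosen for illustration.

```python
from itertools import product

# Levels as named in this article. The study may have varied other factors
# or levels, and may not have shown every combination (assumption).
CREATORS = ["intimate partner", "stranger"]
INTENTS = ["harm", "entertainment", "sexual pleasure"]
ACTIONS = ["performing a sexual act", "playing a sport", "speaking"]


def build_vignettes():
    """Return one dict per creator/intent/action combination."""
    return [
        {"creator": creator, "intent": intent, "action": action}
        for creator, intent, action in product(CREATORS, INTENTS, ACTIONS)
    ]


vignettes = build_vignettes()
print(len(vignettes))  # 2 creators * 3 intents * 3 actions = 18
```

Under these assumptions, crossing the three variables yields 18 distinct scenarios, which shows how changing one variable at a time lets researchers compare responses across otherwise identical vignettes.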

The respondents then rated various actions around the scenarios (creating the video, sharing it in different ways, seeking it out) on a scale from “totally unacceptable” to “totally acceptable” and explained their responses in a sentence or two. Finally, they filled out surveys on consent and demographic information. The respondents were over the age of 18 and were 50% women, 48% men, 2% non-binary and 1% agender.

The survey respondents rated various actions around the synthetic media scenarios. The responses to each are graphed above.

Overall, respondents found creating and sharing synthetic media unacceptable. The median share of “totally unacceptable” or “somewhat unacceptable” ratings was 90% for creating these media and 94% for sharing them. For seeking out synthetic media, however, the median share of unacceptable ratings was only 53%.

Men were more likely than respondents of other genders to find creating and sharing synthetic media acceptable, while respondents who had favorable views of sexual consent were more likely to find these actions unacceptable.

“There has been a lot of policy talk about preventing synthetic nudes from getting created. But we don’t have good technical tools to do that, and we need to simultaneously protect consensual use cases,” said co-senior author Elissa M. Redmiles, an assistant professor of computer science at Georgetown University. “Instead, we need to change social norms. So we need things like deterrence messaging on searches — we’ve seen that be effective at reducing the viewing of child sexual abuse images — and consent-based education in schools focused on this content.”

Respondents found scenarios in which intimate partners created synthetic media of people playing sports or speaking, with the intent of entertainment, the most acceptable. Conversely, nearly all respondents found it totally unacceptable to create and share sexual deepfakes of intimate partners with the intent of harm.

Respondents’ reasoning varied. Some found synthetic media unacceptable only if the outcome was harmful. For example, one respondent wrote, “It’s not harming me or blackmailing me… [a]s long as it doesn’t get shared I think it’s okay.” Others, though, centered their right to privacy and right to consent. “I feel it’s unacceptable to manipulate my image in such a way — my body and how it looks belongs to me,” wrote another.

The researchers note that future work in this space should explore the prevalence of non-consensual synthetic media, the pipelines for how it’s created and shared, and different methods to deter people from creating, sharing and seeking out non-consensual synthetic media.

“Some people argue that AI tools for creating synthetic images will have benefits for society, like for the arts or human creativity,” said co-author Miranda Wei, a doctoral student in the Allen School. “However, we found that most people thought creating synthetic images of others in most cases was unacceptable — suggesting that we still have a lot more work to do when it comes to evaluating the impacts of new technologies and preventing harms.”

This research was funded in part by the National Science Foundation and the Google PhD Fellowship Program.

For more information, contact Brigham at nbrigham@uw.edu, Wei at weimf@cs.washington.edu, Kohno at yoshi@cs.washington.edu and Redmiles at elissa.redmiles@georgetown.edu.