(Cross-posted from Allen School News.)

Reporters contributing to the Panama Papers investigation meet in Munich, Germany, to receive training on ICIJ’s research tools. Photo credit: Kristof Clerix

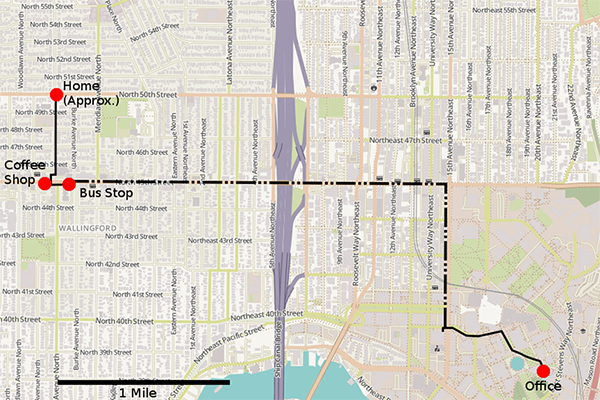

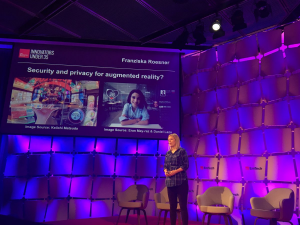

When the Panama Papers story first broke in April 2016, its explosive revelations of a vast, hidden network of offshore shell companies and financial scandals-in-waiting tied to politicians, corporations, banking institutions, and organized crime represented a victory for good, old-fashioned investigative journalism, with a high-tech twist. In addition to provoking international outrage, toppling governments, and prompting audits and investigations in more than 70 countries, the story caught the eye of researchers such as Allen School professor Franziska Roesner. Working with a team from the University of Washington’s Security and Privacy Research Lab and collaborators at Columbia University and Clemson University, Roesner has studied the security practices of journalists and developed new solutions tailored to the needs of the Fourth Estate.

The users of secure systems are notoriously the weakest link. Yet what Roesner and colleagues found in examining the successful Panama Papers investigation was that the users, in this case the more than 300 reporters spread across six continents working under the auspices of the International Consortium of Investigative Journalists (ICIJ), were in fact a source of strength.

“Success stories in computer security are rare,” noted Roesner. “But we discovered that the journalists involved in the Panama Papers project seem to have achieved their security goals.”

The researchers set out to determine how hundreds of journalists with varying degrees of technical acumen were able to collaborate securely on the year-long investigation, which involved 11.5 million leaked documents from the Panama-based law firm Mossack Fonseca that implicated individuals and entities at the highest reaches of power. They drew on survey data from 118 journalists who participated in the investigation and on in-depth, semi-structured interviews with the people who designed and implemented the security systems that enabled global collaboration while protecting the collaborators. The team presented its findings in the paper “When the Weakest Link Is Strong: Secure Collaboration in the Case of the Panama Papers” at the 26th USENIX Security Symposium in Vancouver, Canada, last month.

Allen School professor Franziska Roesner has made a study of journalists’ security needs and practices

Roesner and her colleagues were surprised by how strictly and consistently ICIJ was able to enforce security requirements such as PGP and two-factor authentication, even among journalists for whom such tools and practices were new. One of the main reasons for the operation’s success, the researchers found, was utility.

“We found that the tools developed for the project were highly useful and usable, which motivated journalists to use the secure communication platforms provided by the ICIJ,” explained Susan McGregor, a professor at Columbia Journalism School who was a principal investigator on the study along with Kelly Caine of Clemson University’s School of Computing.

The researchers also found that journalists were motivated by more than sheer usefulness: their sense of community, and of responsibility to that community, spurred them not only to tolerate but to embrace the strict security requirements put in place.

“The project leaders frequently communicated the importance of security and mutual trust,” Roesner noted. “This cultivated a strong sense of shared responsibility for the security of not only themselves, but of their colleagues — they were all in this together, and that was a powerful factor in the success of the operation, from a security standpoint.”

It also helped that the ICIJ walked its talk: if a journalist did not have access to a cellphone that could serve as a second factor, the organization purchased and configured one for them. The ICIJ also made PGP a default tool and ensured that everyone had a PGP key, sparing journalists the guesswork of evaluating and selecting appropriate tools on their own.
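The article does not say which second-factor mechanism the ICIJ used. One common way a phone serves as a second factor is an authenticator app that generates time-based one-time passwords (TOTP, RFC 6238). As an illustration only, and assuming TOTP with HMAC-SHA1 and the usual 30-second time step, the following minimal Python sketch shows how such codes could be generated on the phone and checked by a server. The function names are hypothetical and not part of any ICIJ system.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226), using HMAC-SHA1."""
    # The counter is encoded as an 8-byte big-endian integer.
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte select a 4-byte window.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    # Keep the last `digits` decimal digits, zero-padded on the left.
    return str(code % (10 ** digits)).zfill(digits)


def totp(key: bytes, unix_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    if unix_time is None:
        unix_time = time.time()
    return hotp(key, int(unix_time // step), digits)


def verify_totp(key: bytes, submitted: str, unix_time: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept a code from the current step or up to `window` steps on either
    side, to tolerate clock drift between the phone and the server."""
    if unix_time is None:
        unix_time = time.time()
    current = int(unix_time // step)
    # Counters must be non-negative to fit the unsigned 8-byte encoding.
    for counter in range(max(0, current - window), current + window + 1):
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(hotp(key, counter, digits), submitted):
            return True
    return False


if __name__ == "__main__":
    secret = b"12345678901234567890"  # shared secret from the RFC 6238 test vectors
    print(totp(secret, unix_time=59, digits=8))  # 94287082
```

In this sketch the phone and the server share only a secret key; the code itself is derived from that key and the clock, so a stolen password alone is not enough to log in. The `window` parameter trades a little extra tolerance for clock drift against a slightly larger set of accepted codes.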

ICIJ’s approach helped it avoid a number of known pitfalls in journalists’ security. Earlier work by Roesner and her collaborators, which examined the security and privacy needs and constraints of journalists and of the media organizations that employ them, revealed that existing tools are often inadequate and can impede the gathering of information. The researchers found that this frequently led journalists to create ad hoc workarounds that may compromise their own security and the security of their sources.

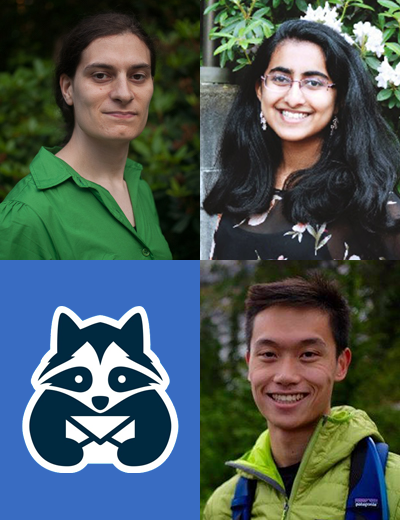

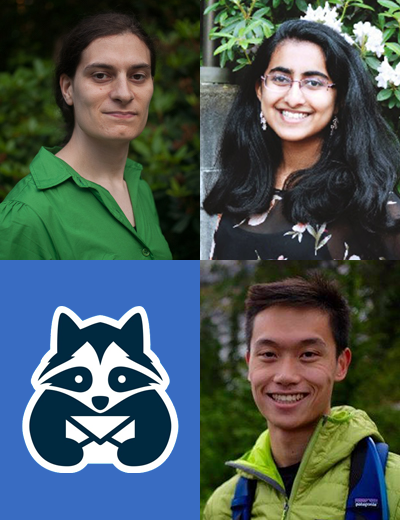

Armed with the lessons learned from those previous studies, Roesner teamed up with Allen School Ph.D. students Ada Lerner (now a faculty member at Wellesley College) and Eric Zeng, and undergraduate student Mitali Palekar, to develop Confidante, a usable encrypted email client for journalists and others who require secure electronic communication. Confidante aims to improve on traditional PGP tools like those used in the Panama Papers investigation.

“We built Confidante to explore how we could combine strong security with ease of use and minimal configuration. One of our goals was for it to feel, as much as possible, like using regular email,” explained Lerner.

Confidante team members, clockwise from top left: Ada Lerner, Mitali Palekar, and Eric Zeng

“Building it allowed us to get really specific with journalists in our user study, since it was a prototype they could try out and react to — and that allowed us to ask them about the ways in which it did and didn’t meet their needs,” she continued. “It let us more concretely understand what kind of system might be able to provide journalists with strong protections, including reducing user errors that might inadvertently compromise their security.”

Confidante is built on top of Gmail, which it uses to send and receive messages, and Keybase, which it uses for automatic public/private key management. In a study of a working prototype involving journalists and lawyers, the team found that Confidante enabled users to complete an encrypted email task more quickly, and with fewer errors, than an existing email encryption tool. Users also welcomed its compatibility with mobile devices.

“Every journalist and lawyer involved in our user study regularly reads and responds to email on the go, so any encrypted email solution developed for this group must work on mobile devices,” noted Zeng. “As a standalone email app built with modern web technologies, Confidante meets this need, whereas integrated PGP tools like browser extensions do not.”

Some participants observed that using Confidante, with its automated key management, was not much different from sending regular email. This suggests that Roesner and her colleagues had hit the mark in balancing user preferences with strong security.

“Tools fail in part when the technical community has built the wrong thing, so it’s important for us as computer security researchers to understand user needs and constraints,” observed Roesner. “What the Panama Papers study and Confidante illustrate is that there are ways to help journalists to do their jobs securely as well as effectively — and this is important not just for these individuals and their sources, but for society at large.”

Read the USENIX Security paper to learn more about computer security and the Panama Papers. Visit the Confidante website to try out the prototype and view the publicly available source code from the Allen School research team.
