(Cross-posted from Allen School News.)

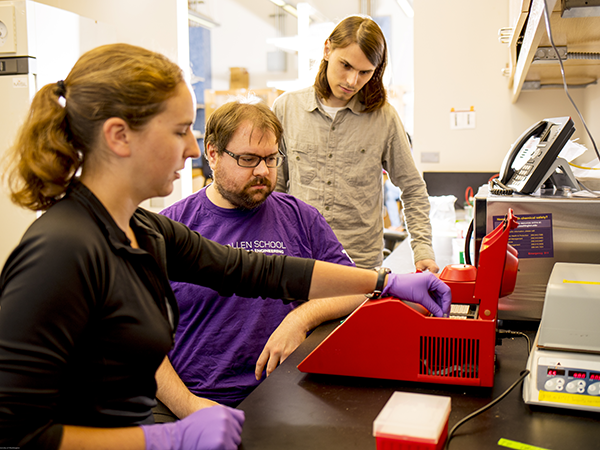

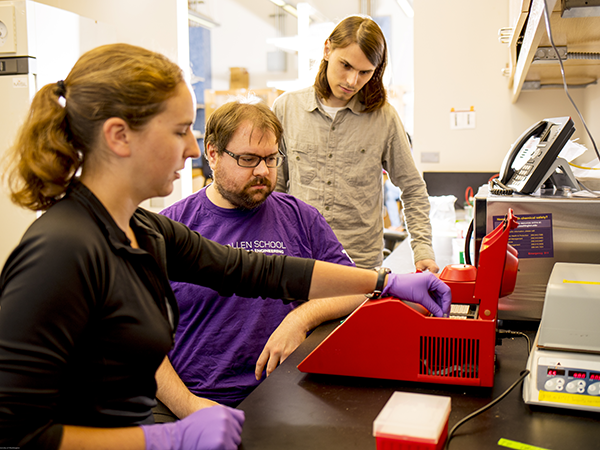

Left to right: Lee Organick, Karl Koscher, and Peter Ney prepare the DNA exploit.

In an illustration of just how narrow the divide between the biological and digital worlds has become, a team of researchers from the Allen School released a study revealing potential security risks in software commonly used for DNA sequencing and analysis — and demonstrated for the first time that it is possible to infect software systems with malware delivered via DNA molecules. The team will present its paper, “Computer Security, Privacy, and DNA Sequencing: Compromising Computers with Synthesized DNA, Privacy Leaks, and More,” at the USENIX Security Symposium in Vancouver, British Columbia next week.

Many open-source systems used in DNA analysis began in the cloistered domain of the research lab. As the cost of DNA sequencing has plummeted, new medical and consumer-oriented services have taken advantage of it, leading to more widespread use and, with it, greater potential for abuse. While there is no evidence to indicate that DNA sequencing software is at imminent risk, the researchers say now would be a good time to address potential vulnerabilities.

“One of the big things we try to do in the computer security community is to avoid a situation where we say, ‘Oh shoot, adversaries are here and knocking on our door and we’re not prepared,’” said professor Tadayoshi Kohno, co-director of the Security and Privacy Research Lab, in a UW News release.

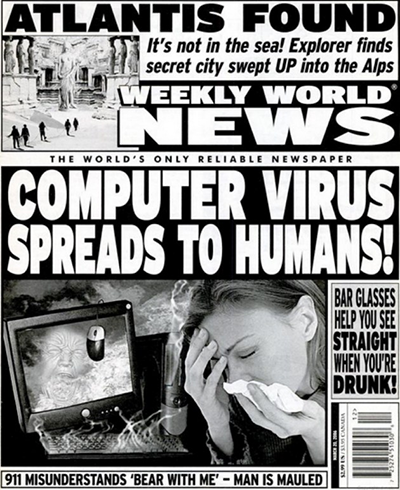

Researcher Tadayoshi Kohno wondered if what this tabloid headline suggested would work in reverse: Could DNA be used to deliver a virus to a computer?

Kohno and Karl Koscher (Ph.D., ’14), who works with Kohno in the Security and Privacy Research Lab, have been down this road before — literally as well as figuratively. In 2010, they and a group of fellow UW and University of California, San Diego security researchers demonstrated that it was possible to hack into modern automobile systems connected to the internet. They have also explored potential security vulnerabilities in implantable medical devices and household robots.

Kohno conceived of this latest experiment after he came across an online discussion about a tabloid headline in which a person was alleged to have been infected by a computer virus. While he wasn’t about to take that fantastical storyline at face value, Kohno was curious whether the concept might work in reverse.

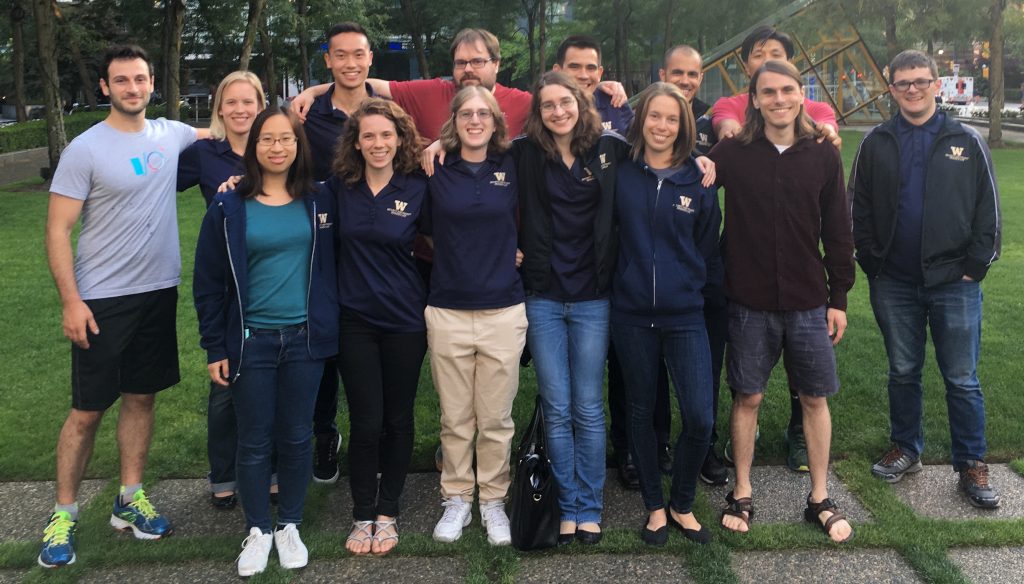

Kohno, Koscher, and Allen School Ph.D. student Peter Ney, representing the cybersecurity side of the equation, teamed up with professor Luis Ceze and research scientist Lee Organick of the Molecular Information Systems Lab, who are working on an unrelated project to create a DNA-based storage solution for digital data. The group decided that they would not only analyze existing software for vulnerabilities but also attempt to exploit them.

“We wondered whether under semi-realistic circumstances it would be possible to use biological molecules to infect a computer through normal DNA processing,” Ney said.

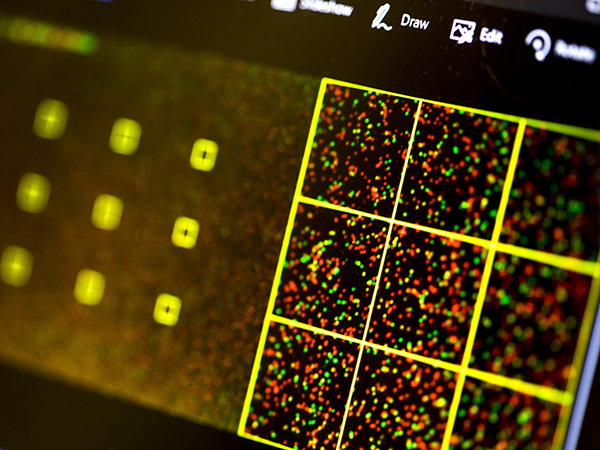

As it turns out, it is possible. The team introduced a known vulnerability into software they would then use to analyze the DNA sequence. They encoded a malicious exploit within strands of synthetic DNA, and then processed those strands using the compromised software. When they did, the researchers were able to execute the encoded malware to gain control of the computer on which the sample was being analyzed.
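The article does not say how the exploit bytes were turned into a DNA sequence. As a rough illustration only, a common textbook scheme packs two bits into each nucleotide, so every byte becomes four bases. The mapping below (`A`=00, `C`=01, `G`=10, `T`=11) and the helper names `bytes_to_dna` and `dna_to_bytes` are hypothetical choices for this sketch, not the team's method, and a real payload would also have to survive DNA synthesis and sequencing, which this sketch ignores.

```python
# Hypothetical 2-bits-per-base mapping, chosen for illustration only;
# this is an assumption, not necessarily the scheme the researchers used.
BASE_FOR_BITS = {0b00: "A", 0b01: "C", 0b10: "G", 0b11: "T"}
BITS_FOR_BASE = {base: bits for bits, base in BASE_FOR_BITS.items()}


def bytes_to_dna(data: bytes) -> str:
    """Encode each byte as four nucleotides, most significant bit pair first."""
    bases = []
    for byte in data:
        for shift in (6, 4, 2, 0):
            bases.append(BASE_FOR_BITS[(byte >> shift) & 0b11])
    return "".join(bases)


def dna_to_bytes(seq: str) -> bytes:
    """Decode a sequence whose length is a multiple of four back into bytes."""
    if len(seq) % 4 != 0:
        raise ValueError("sequence length must be a multiple of 4")
    out = bytearray()
    for i in range(0, len(seq), 4):
        byte = 0
        for base in seq[i:i + 4]:
            # Shift in two bits per nucleotide; unknown bases raise KeyError.
            byte = (byte << 2) | BITS_FOR_BASE[base]
        out.append(byte)
    return bytes(out)


if __name__ == "__main__":
    payload = b"Hi"
    strand = bytes_to_dna(payload)
    print(strand)  # prints CAGACGGC
    assert dna_to_bytes(strand) == payload
```

Because sequencing software reads bases back as text, any program that converts such a sequence into packed binary without checking lengths is, in effect, reading attacker-chosen bytes, which is why unchecked buffers in that code path matter.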

While someone would have to overcome a number of physical and technical challenges to replicate the experiment in the wild, the result should nevertheless serve as a wake-up call for an industry that has not yet had to contend with significant cybersecurity threats. According to Koscher, there are steps companies and labs can take immediately to improve the security of their DNA sequencing software and practice good “security hygiene.”

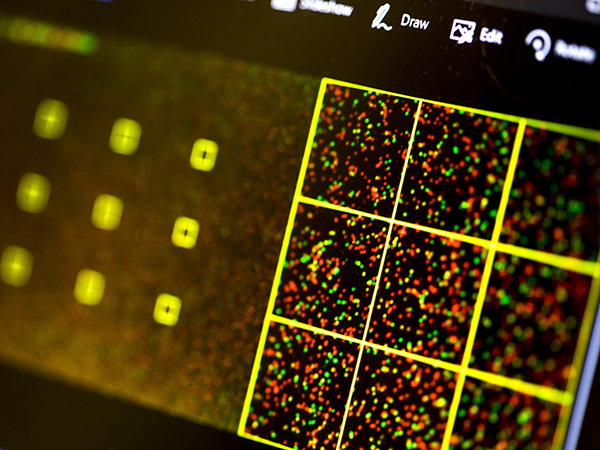

This output from a DNA sequencing machine includes the team’s exploit.

“There is some really low-hanging fruit out there that people could address just by running standard software analysis tools that will point out security problems and recommend fixes,” he suggested. For the longer term, the group’s recommendations include employing adversarial thinking in setting up new processes, verifying the source of DNA samples prior to processing, and developing the means to detect malicious code in DNA.

The team emphasized that people who use DNA sequencing services should not worry about the security of their personal genetic and medical information — at least, not yet. “Even if someone wanted to do this maliciously, it might not work,” Organick told UW News.

Ceze admits he is concerned by what the team discovered during its analysis, but for now that concern is largely rooted in conjecture.

“We don’t want to alarm people,” Ceze pointed out. “We do want to give people a heads up that as these molecular and electronic worlds get closer together, there are potential interactions that we haven’t really had to contemplate before.”

Visit the project website and read the UW News release to learn more.

Also see coverage in Wired, The Wall Street Journal, MIT Technology Review, The Atlantic, TechCrunch, Mashable, Gizmodo, ZDNet, GeekWire, Inverse, IEEE Spectrum, and TechRepublic.
